Clinical trials are essential for discovering new treatments and ensuring they are safe and effective. At the heart of these trials is statistical analysis, a critical process that helps researchers interpret data accurately.

This article aims to explain the key aspects of statistical analysis in clinical trials, offering a clear and detailed guide to various methodologies such as sequential analysis, hierarchical models, and Bayesian methods. We’ll also explore the importance of maintaining statistical integrity, how to manage common challenges like missing data, and the impact of events like the COVID-19 pandemic on trial practices.

Whether you’re a researcher, clinician, or student, this guide will provide you with the knowledge needed to understand and apply statistical techniques in clinical trials, ensuring your findings are reliable and impactful.

Key Statistical Approaches in Clinical Trials

Sequential Analysis

Sequential analysis evaluates accumulating data at planned points during a clinical trial rather than only at the end of the study. This allows more flexible and ethical decision-making: researchers can stop a trial early if a treatment proves clearly effective, clearly harmful, or futile.

- Early Stopping Rules: Sequential analysis uses stopping boundaries that are specified before any data are examined. Because the boundaries are fixed in advance, decisions are made objectively rather than in response to unplanned looks at the interim data, and the boundaries are set so that testing repeatedly does not inflate the overall false-positive (type I error) rate. Interim analyses also matter ethically, because they ensure participants are not subjected to ineffective or harmful treatments longer than necessary.

- Efficiency and Ethics: By potentially reducing the duration of the trial, sequential analysis can save resources and reduce the exposure of participants to potentially ineffective or harmful treatments. This approach aligns with the principles of ethical clinical practice by minimizing the risk to participants.
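Why the stopping rules must be fixed in advance can be illustrated with a small simulation. The sketch below is a minimal illustration, not a design tool: it assumes a two-arm trial with normally distributed outcomes of known variance, no true treatment effect, and five equally spaced looks, so that the Z statistic at look k equals the sum of k independent standard normal increments divided by √k. It compares stopping whenever |Z| ≥ 1.96 at any look with a constant Pocock boundary of 2.413, the tabulated value for five looks at a two-sided 5% level. The function name and its parameters are hypothetical.

```python
import math
import random


def overall_type1_error(boundary, n_looks=5, n_sims=20_000, seed=1):
    """Estimate P(|Z| >= boundary at any look) when the treatment has no effect.

    Assumes equally spaced looks, each adding the same number of patients per arm,
    and normally distributed outcomes with known variance.
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        score = 0.0  # cumulative sum of standardized increments
        for look in range(1, n_looks + 1):
            # Under the null, each new block of data adds an independent N(0, 1) increment
            score += rng.gauss(0.0, 1.0)
            z = score / math.sqrt(look)  # Z statistic on all data accumulated so far
            if abs(z) >= boundary:
                rejections += 1
                break  # the trial stops at the first boundary crossing
    return rejections / n_sims


naive = overall_type1_error(boundary=1.96)    # unadjusted cutoff at every look
pocock = overall_type1_error(boundary=2.413)  # Pocock constant, 5 looks, two-sided 5%
print(f"naive: {naive:.3f}  Pocock: {pocock:.3f}")
```

With these settings the unadjusted rule should reject in roughly 14% of simulated null trials, while the Pocock boundary stays close to 5%.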

Hierarchical Models

Hierarchical models, also known as multi-level models, are statistical techniques that handle data with a nested structure. For instance, patients may be nested within different clinics, or measurements may be nested within patients.

- Variance Partitioning: Hierarchical models partition the variance into components at each level (for example, between clinics and between patients within a clinic). This gives more accurate standard errors than an analysis that ignores the clustering and shows where the variability in the data arises. These models are particularly useful in randomized trials and observational studies where data is collected across multiple levels or groups.

- Flexibility: These models are flexible and can accommodate complex data structures, making them particularly useful in multi-center clinical trials. Hierarchical models can also handle variability in measurement and subject characteristics, ensuring more reliable results.
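Variance partitioning is often summarized by the intraclass correlation coefficient (ICC), the share of total variance that lies between clusters. The sketch below assumes a balanced design (the same number of patients in every clinic) and uses the classical one-way ANOVA, or method-of-moments, estimators; a real analysis would more typically fit a mixed model by restricted maximum likelihood in dedicated software. The function name and the example readings are hypothetical.

```python
from statistics import mean


def variance_components(groups):
    """Between- and within-cluster variance for a balanced random-intercept model.

    groups: one list of patient outcomes per clinic, all of the same length.
    Returns (between_var, within_var, icc) using one-way ANOVA estimators.
    """
    k = len(groups)     # number of clinics
    n = len(groups[0])  # patients per clinic
    if any(len(g) != n for g in groups):
        raise ValueError("this sketch assumes equal cluster sizes")
    grand = mean(x for g in groups for x in g)
    group_means = [mean(g) for g in groups]
    # Mean squares between clinics and within clinics
    msb = n * sum((m - grand) ** 2 for m in group_means) / (k - 1)
    msw = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g) / (k * (n - 1))
    within_var = msw
    between_var = max((msb - msw) / n, 0.0)  # negative estimates are truncated at zero
    icc = between_var / (between_var + within_var)
    return between_var, within_var, icc


# Hypothetical outcome measurements for three clinics with three patients each
clinics = [[12.1, 11.4, 13.0], [9.8, 10.5, 10.1], [14.2, 13.6, 15.0]]
print(variance_components(clinics))
```

An ICC near zero suggests clustering matters little; a large ICC means outcomes within a clinic are strongly correlated, so treating patients as independent would understate the standard errors.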

Bayesian Analysis

Bayesian analysis incorporates prior information along with current data to update the probability of a hypothesis being true. This method is particularly advantageous in clinical trials where prior knowledge exists and can be used to inform the analysis.

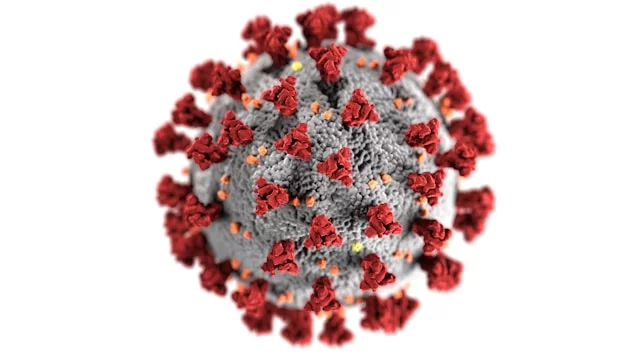

- Incorporation of Prior Information: Bayesian methods allow the inclusion of previous studies or expert opinions, which can improve the efficiency of the trial. For example, in trials assessing new treatments for COVID-19 infections, prior data from earlier studies can be integrated to enhance current trial designs. The European Medicines Agency (EMA) provides guidelines on using Bayesian methods in clinical trials.

- Dynamic Updating: As new data is collected, Bayesian analysis updates the probability estimates, allowing for continuous learning and adaptation. This is especially useful in adaptive analysis strategies where trial designs evolve based on interim results.
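The dynamic updating described above is easiest to see with a conjugate Beta-binomial model for a response rate, one of the simplest Bayesian models and shown here purely as an illustration. The sketch assumes a binary response outcome; the class name, the Beta(3, 7) prior, and the interim counts are hypothetical. Updating batch by batch gives exactly the same posterior as updating once with all the data, which is what allows continuous learning as results accumulate.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaBelief:
    """Beta(a, b) distribution describing uncertainty about a response rate."""
    a: float
    b: float

    def update(self, responders: int, n: int) -> "BetaBelief":
        """Conjugate update: responders add to a, non-responders add to b."""
        if not 0 <= responders <= n:
            raise ValueError("responders must be between 0 and n")
        return BetaBelief(self.a + responders, self.b + (n - responders))

    def mean(self) -> float:
        return self.a / (self.a + self.b)

    def prob_above(self, threshold: float, draws: int = 100_000, seed: int = 0) -> float:
        """Monte Carlo estimate of P(response rate > threshold)."""
        rng = random.Random(seed)
        hits = sum(rng.betavariate(self.a, self.b) > threshold for _ in range(draws))
        return hits / draws


# Hypothetical prior: roughly a 30% response rate, worth about 10 patients of information
prior = BetaBelief(3, 7)
after_first = prior.update(responders=12, n=30)         # first interim batch
after_second = after_first.update(responders=14, n=30)  # second batch
print(after_second, round(after_second.mean(), 3), after_second.prob_above(0.3))
```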

Meta-Analysis

Meta-analysis combines data from multiple studies to provide a comprehensive assessment of the treatment effect. This approach increases statistical power and generalizability, offering broader insights than individual studies alone.

- Enhanced Power: By pooling data, meta-analysis increases the effective sample size, thereby enhancing the statistical power to detect treatment effects. This is particularly important when individual studies are small or the outcome is rare.

- Broader Insights: This method provides a more comprehensive understanding of the treatment’s efficacy and safety across different populations and settings. Meta-analyses often employ advanced statistical models, such as random effects models, to account for variability between studies.
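The pooling described above can be sketched with inverse-variance weighting, which gives the fixed-effect estimate, and the DerSimonian-Laird estimator of the between-study variance (tau²), one common way to fit a random-effects model. The sketch assumes each study reports an effect estimate on a common scale (for example, a log odds ratio) with its standard error; the function name and the example values are hypothetical, and established meta-analysis packages offer more refined heterogeneity estimators.

```python
import math


def pool(estimates, std_errors):
    """Fixed-effect (inverse-variance) and DerSimonian-Laird random-effects pooling."""
    w = [1 / se ** 2 for se in std_errors]  # inverse-variance weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    # Cochran's Q quantifies between-study heterogeneity
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    # Random-effects weights add tau2 to each study's own variance
    w_re = [1 / (se ** 2 + tau2) for se in std_errors]
    random_effect = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return {
        "fixed": fixed,
        "fixed_se": math.sqrt(1 / sum(w)),
        "tau2": tau2,
        "random": random_effect,
        "random_se": math.sqrt(1 / sum(w_re)),
    }


# Hypothetical log odds ratios and standard errors from four trials
print(pool([-0.35, -0.10, -0.52, 0.05], [0.20, 0.15, 0.30, 0.25]))
```

When the studies disagree more than their standard errors can explain, tau² becomes positive and the random-effects standard error widens accordingly.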

Importance of Statistical Integrity

Ensuring Robustness in Results

Statistical integrity is crucial to ensuring the reliability and validity of clinical trial results. Robustness in statistical analysis refers to the consistency and reliability of the results under various conditions.

- Reproducibility: Ensuring that the results can be reproduced in different settings or with different data sets is a key aspect of robustness. This includes replicating findings in future studies to confirm the treatment’s effects.

- Sensitivity Analyses: Conducting sensitivity analyses to test the robustness of the results against various assumptions and potential sources of bias. For example, sensitivity analyses can assess how missing data or different analysis models impact the study’s conclusions.

Managing Bias and Variability

Bias and variability are inherent challenges in clinical trials that can significantly impact the validity of the results.

- Randomization: Proper randomization helps to balance known and unknown confounders between treatment groups, minimizing bias. This is a fundamental aspect of randomized trials to ensure comparable groups.

- Blinding: Blinding participants and investigators to the treatment allocation reduces the potential for bias in outcome assessment. Double-blind designs are particularly effective in minimizing subjective influences on the results.
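Proper randomization in practice usually relies on a pre-generated, concealed allocation schedule. The sketch below shows one widely used scheme, permuted-block randomization, which keeps the arms balanced after every completed block. The function name, block size, and arm labels are assumptions for illustration; real schedules are produced by validated systems, and block sizes are often varied so that upcoming assignments cannot be guessed.

```python
import random


def permuted_block_schedule(n_blocks, block_size=4, arms=("A", "B"), seed=None):
    """Equal-allocation randomization list built from randomly permuted blocks."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        block = list(arms) * (block_size // len(arms))  # equal count of each arm
        rng.shuffle(block)                               # random order within the block
        schedule.extend(block)
    return schedule


print(permuted_block_schedule(3, seed=2024))
```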

The Impact of COVID-19 on Statistical Practices

The COVID-19 pandemic has significantly impacted the conduct and analysis of clinical trials, introducing new challenges and necessitating adaptations in statistical practices.

- Remote Monitoring: The need for social distancing has led to the increased use of remote monitoring techniques, which require adjustments in data collection and analysis methods. This shift necessitates robust data management systems to ensure data integrity. The FDA issued guidance on conducting clinical trials during the pandemic.

- Data Variability: The pandemic has introduced additional variability in data due to factors such as changes in healthcare delivery and patient behavior. Trials must adapt their analysis approach to account for these variations.

Risk Assessment and Management

Risk assessment in clinical trials involves identifying and evaluating potential risks to ensure the safety of participants and the integrity of the data.

- Risk-Based Monitoring: Implementing a risk-based monitoring approach helps prioritize resources and focus on critical data and processes. This method aligns with the principles of the Institute of Medicine’s recommendations for efficient trial designs.

- Proactive Risk Management: Identifying potential risks early and implementing mitigation strategies to address them proactively. This includes monitoring adverse events and adjusting the trial protocol as necessary to ensure participant safety.

Handling Intercurrent Events

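Intercurrent events are events that occur after treatment initiation and affect either the interpretation or the existence of the measurements associated with the clinical question of interest, as defined in the ICH E9(R1) addendum on estimands.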

- Defining Intercurrent Events: Clearly defining intercurrent events and their potential impact on the trial outcomes. Examples include discontinuation of the assigned treatment (for instance, because of adverse events), use of rescue medication or other additional therapies, and death.

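- Strategies for Handling: Choosing a strategy for each intercurrent event when defining the estimand, and an analysis that matches it. The ICH E9(R1) addendum describes five strategies: treatment policy, hypothetical, composite variable, while on treatment, and principal stratum. In the analysis, this may mean censoring at the event or adjusting for it; for example, survival analysis techniques handle time-to-event data in which some patients are censored when an intercurrent event occurs.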
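The survival-analysis approach mentioned above can be sketched with the Kaplan-Meier estimator, in which patients whose follow-up ends (for example, at an intercurrent event handled by censoring) contribute information up to that time. The sketch assumes each patient has a follow-up time and an event indicator; the function name and data are hypothetical. Censoring at an intercurrent event is only appropriate if that event is unrelated to the patient's prognosis (non-informative censoring).

```python
def kaplan_meier(times, events):
    """Kaplan-Meier survival estimate.

    times: follow-up time for each patient
    events: 1 if the event occurred at that time, 0 if the patient was censored
    Returns (time, survival probability) pairs at each distinct event time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = censored = 0
        # Count every patient who shares this time point
        while i < len(data) and data[i][0] == t:
            if data[i][1]:
                deaths += 1
            else:
                censored += 1
            i += 1
        if deaths:
            survival *= 1 - deaths / at_risk
            curve.append((t, survival))
        # Censored patients are at risk at their own time, then leave the risk set
        at_risk -= deaths + censored
    return curve


# Hypothetical follow-up (months) and event indicators for six patients
print(kaplan_meier([2, 3, 3, 5, 6, 8], [1, 1, 0, 1, 0, 1]))
```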

Managing Missing Data

Missing data is a common issue in clinical trials that can lead to biased results if not handled appropriately.

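- Imputation Methods: Using methods such as multiple imputation, which replaces each missing value with several plausible values drawn from a model fitted to the observed data, analyzes each completed dataset, and combines the results. Because the combined standard error reflects the uncertainty about the missing values, this approach uses all available information without overstating precision. It is valid when data are missing at random given the variables in the imputation model.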

- Sensitivity Analyses: Conducting sensitivity analyses to assess the impact of different assumptions about the missing data on the study results. This helps to ensure that the conclusions are robust and not overly dependent on how missing data is handled.
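Once each imputed dataset has been analyzed, the results are combined with Rubin's rules: the pooled estimate is the average of the per-dataset estimates, and the total variance adds the average within-imputation variance to the between-imputation variance inflated by (1 + 1/m), where m is the number of imputations. The sketch below assumes the imputation and the per-dataset analyses have already been run elsewhere; the function name and numbers are hypothetical.

```python
from statistics import mean, variance


def rubins_rules(estimates, variances):
    """Pool one estimate per imputed dataset using Rubin's rules.

    estimates: treatment-effect estimate from each of the m imputed datasets
    variances: squared standard error of each of those estimates
    Returns (pooled estimate, total variance).
    """
    m = len(estimates)
    if m < 2 or len(variances) != m:
        raise ValueError("need at least two imputations and one variance per estimate")
    q_bar = mean(estimates)      # pooled point estimate
    w = mean(variances)          # average within-imputation variance
    b = variance(estimates)      # between-imputation variance (divisor m - 1)
    total = w + (1 + 1 / m) * b  # total variance reflects imputation uncertainty
    return q_bar, total


# Hypothetical results from m = 5 imputed datasets
est, total_var = rubins_rules([1.8, 2.1, 1.9, 2.3, 2.0], [0.16, 0.15, 0.17, 0.16, 0.15])
print(f"pooled estimate {est:.2f}, standard error {total_var ** 0.5:.3f}")
```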

Pre-SPEC Framework

Definition and Importance

The Pre-SPEC (Pre-Specified) framework involves defining the statistical analysis plan before the trial begins to ensure transparency and reduce bias.

- Transparency: Pre-specifying the analysis plan ensures that all stakeholders are aware of the planned analyses, reducing the potential for post hoc data manipulation. This is critical for maintaining the integrity and credibility of clinical trials. The Clinical Trials Transformation Initiative (CTTI) provides best practices for pre-specification.

- Regulatory Compliance: Adhering to pre-specified plans is often a regulatory requirement, enhancing the credibility of the trial results. Regulatory agencies like the European Medicines Agency (EMA) emphasize the importance of pre-specified analysis plans.

Advantages of Pre-Specifying Analysis Plans

Pre-specifying analysis plans offers several advantages, including increased credibility and reduced potential for bias.

- Objectivity: Ensures that the analyses are conducted objectively, based on predefined criteria rather than being influenced by the observed data. This approach helps avoid selective reporting of positive findings.

- Consistency: Promotes consistency in the analysis, making it easier to compare results across different studies. This is particularly important in meta-analyses and systematic reviews.

Key Statistical Techniques

Key statistical techniques that a pre-specified analysis plan should address include power analysis, multiple comparisons adjustments, and adaptive analysis strategies.

- Power Analysis: Conducting power analysis to determine the appropriate sample size needed to detect a clinically significant effect. This ensures that the study is adequately powered to detect meaningful differences. Learn more about power analysis on Harvard Catalyst.

- Multiple Comparisons Adjustments: Adjusting for multiple comparisons to reduce the risk of false positives, either by controlling the family-wise error rate (for example, with the Bonferroni correction) or by controlling the False Discovery Rate (FDR).

- Adaptive Analysis Strategies: Using adaptive analysis strategies to modify the trial design based on interim data without compromising the integrity of the trial. These strategies include group sequential designs and sample size re-estimation.

Power Analysis

Power analysis is a crucial step in the design of clinical trials, ensuring that the study has enough power to detect a clinically significant effect.

- Sample Size Determination: Calculating the sample size required to achieve a specified level of statistical power, typically 80% or 90%. This involves considering the expected effect size, standard deviation, and desired significance level.

- Effect Size: Estimating the expected effect size based on previous studies or pilot data. Accurate estimation of the effect size is crucial for determining the necessary sample size.
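The sample size calculation described above can be sketched for the common case of comparing two means with equal allocation and a two-sided test, using the normal-approximation formula n = 2(z_{1-α/2} + z_{1-β})² σ² / δ² per group, where δ is the expected difference and σ the standard deviation. The function name is hypothetical; the formula ignores dropout, and calculations based on the t distribution give slightly larger numbers (64 rather than 63 per group for the 80% power example below).

```python
import math
from statistics import NormalDist


def n_per_group(delta, sd, alpha=0.05, power=0.80):
    """Patients per arm to detect a mean difference `delta` with a two-sided test.

    Normal approximation: n = 2 * (z_{1-alpha/2} + z_{power})**2 * sd**2 / delta**2,
    rounded up to the next whole patient. Does not allow for dropout.
    """
    z = NormalDist().inv_cdf  # standard normal quantile function
    n = 2 * (z(1 - alpha / 2) + z(power)) ** 2 * sd ** 2 / delta ** 2
    return math.ceil(n)


print(n_per_group(delta=0.5, sd=1.0))              # 80% power
print(n_per_group(delta=0.5, sd=1.0, power=0.90))  # 90% power
```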

Multiple Comparisons Adjustments

When multiple comparisons are made in a clinical trial, the risk of false positives increases. Multiple comparisons adjustments are used to control this risk.

- Bonferroni Correction: A simple and conservative method to adjust for multiple comparisons by dividing the significance level by the number of tests. While effective, it can be overly conservative and reduce statistical power.

- False Discovery Rate (FDR): A more flexible approach that controls the expected proportion of false positives among the rejected hypotheses. FDR methods, such as the Benjamini-Hochberg procedure, balance the need to control false positives while maintaining statistical power.
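Both adjustments are short enough to sketch directly. The functions below are illustrative implementations of the Bonferroni rule and the Benjamini-Hochberg step-up procedure; the function names and example p-values are hypothetical. With these five p-values, Bonferroni rejects only the smallest, while Benjamini-Hochberg rejects four, illustrating the difference in power described above.

```python
def bonferroni(pvalues, alpha=0.05):
    """Reject H_i when p_i <= alpha / m (controls the family-wise error rate)."""
    m = len(pvalues)
    return [p <= alpha / m for p in pvalues]


def benjamini_hochberg(pvalues, alpha=0.05):
    """Benjamini-Hochberg step-up procedure (controls the false discovery rate)."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])  # indices by ascending p-value
    # Find the largest rank k with p_(k) <= k * alpha / m
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if pvalues[i] <= rank * alpha / m:
            k_max = rank
    rejected = [False] * m
    for i in order[:k_max]:
        rejected[i] = True  # reject every hypothesis ranked at or below k_max
    return rejected


pvals = [0.035, 0.005, 0.13, 0.011, 0.02]  # hypothetical p-values
print(bonferroni(pvals))
print(benjamini_hochberg(pvals))
```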

Adaptive Analysis Strategies

Adaptive analysis strategies allow for modifications to the trial design based on interim data without compromising the trial’s integrity.

- Group Sequential Designs: Allow for multiple interim analyses with the possibility of stopping the trial early for efficacy or futility. This approach helps ensure that participants are not exposed to ineffective treatments longer than necessary.

- Sample Size Re-estimation: Adjusting the sample size based on interim results to ensure the trial has adequate power. This strategy helps address uncertainties in initial sample size calculations.
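Group sequential designs commonly distribute the overall type I error across the interim looks with an alpha-spending function (the Lan-DeMets approach). The sketch below evaluates the O'Brien-Fleming-type spending function α(t) = 2 - 2Φ(z_{1-α/2}/√t), where t is the information fraction, showing how little alpha such designs spend at early looks. Converting the spent alpha into actual stopping boundaries requires recursive numerical integration, which dedicated group sequential software (for example, the R packages gsDesign or rpact) handles; the function name and the four-look schedule here are hypothetical.

```python
import math
from statistics import NormalDist


def obf_alpha_spent(t, alpha=0.05):
    """Cumulative two-sided alpha spent by information fraction t (0 < t <= 1).

    Lan-DeMets O'Brien-Fleming-type spending function:
    alpha(t) = 2 - 2 * Phi(z_{1 - alpha/2} / sqrt(t)).
    """
    if not 0 < t <= 1:
        raise ValueError("information fraction must be in (0, 1]")
    nd = NormalDist()
    return 2 - 2 * nd.cdf(nd.inv_cdf(1 - alpha / 2) / math.sqrt(t))


# Four equally spaced looks (hypothetical schedule)
for t in (0.25, 0.50, 0.75, 1.00):
    print(f"information {t:.2f}: cumulative alpha spent {obf_alpha_spent(t):.5f}")
```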

Safety Analyses in Clinical Trials

Importance of Safety Evaluations

Safety evaluations are a critical component of clinical trials, ensuring that the treatment does not pose undue risks to participants.

- Adverse Event Monitoring: Continuously monitoring and reporting adverse events to assess the treatment’s safety profile. This is essential for ensuring participant safety and informing regulatory decisions.

- Safety Endpoints: Pre-defining safety endpoints and incorporating them into the analysis plan. This helps ensure that safety considerations are systematically evaluated and reported.

Interim Evaluations and Their Implications

Interim evaluations involve analyzing data at predefined points during the trial to make decisions about its continuation.

- Stopping for Safety: Interim evaluations may lead to the early termination of the trial if significant safety concerns are identified. This approach ensures that participants are not exposed to unnecessary risks.

- Data Monitoring Committees: Independent committees that review interim data to make recommendations about the trial’s continuation or modification. These committees play a crucial role in maintaining the integrity and safety of the trial.

Challenges in Statistical Analysis

Complexity of Data Handling

Handling complex and large datasets is a significant challenge in clinical trials, requiring advanced statistical techniques and robust data management systems.

- Data Integration: Integrating data from multiple sources and formats can be complex and requires careful planning and execution. This is especially true in trials involving multiple sites or electronic health records.

- Quality Control: Implementing stringent quality control measures to ensure the accuracy and completeness of the data. This includes regular audits and validation checks.
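Validation checks of this kind are often written as automated edit checks that raise queries for missing or implausible values. The sketch below is a minimal illustration; the field names and plausible ranges are hypothetical and would in practice come from the trial's data management plan.

```python
from typing import Any

# Hypothetical edit-check rules: field -> (minimum, maximum) plausible value
RANGE_RULES = {
    "age_years": (18, 100),
    "systolic_bp_mmhg": (60, 250),
    "weight_kg": (30, 250),
}


def edit_checks(record: dict[str, Any], rules=RANGE_RULES) -> list[str]:
    """Return query messages for missing or out-of-range values in one record."""
    queries = []
    for field, (low, high) in rules.items():
        value = record.get(field)
        if value is None:
            queries.append(f"{field}: missing")
        elif not low <= value <= high:
            queries.append(f"{field}: {value} outside plausible range [{low}, {high}]")
    return queries


print(edit_checks({"age_years": 45, "systolic_bp_mmhg": 300}))
```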

Incorporating Real-World Evidence

Incorporating real-world evidence (RWE) into clinical trials can enhance the generalizability of the results but presents unique challenges.

- Data Heterogeneity: RWE often comes from diverse sources with varying data quality and completeness. Addressing these variations requires sophisticated statistical models and careful data curation.

- Analytical Methods: Developing appropriate analytical methods to handle the variability and complexity of real-world data. Techniques such as propensity score matching and sensitivity analyses are often employed to address confounding and bias.
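Propensity score matching can be sketched as follows, assuming the propensity scores (each patient's estimated probability of receiving the treatment given baseline covariates, typically from a logistic regression) have already been estimated. The function below performs greedy 1:1 nearest-neighbor matching without replacement within a caliper; the function name, patient IDs, scores, and caliper value are hypothetical. Matching only balances measured covariates, so covariate balance should be checked afterwards and sensitivity analyses used for unmeasured confounding.

```python
def match_on_propensity(treated, controls, caliper=0.05):
    """Greedy 1:1 nearest-neighbor matching without replacement.

    treated, controls: dicts mapping patient ID -> estimated propensity score.
    Each treated patient is paired with the closest unused control whose score
    lies within `caliper`; treated patients without such a control stay unmatched.
    """
    available = dict(controls)
    pairs = []
    # Process treated patients from highest to lowest score (one common heuristic)
    for t_id, t_score in sorted(treated.items(), key=lambda kv: kv[1], reverse=True):
        if not available:
            break
        c_id, c_score = min(available.items(), key=lambda kv: abs(kv[1] - t_score))
        if abs(c_score - t_score) <= caliper:
            pairs.append((t_id, c_id))
            del available[c_id]  # each control is used at most once
    return pairs


treated = {"T1": 0.80, "T2": 0.40, "T3": 0.95}
controls = {"C1": 0.78, "C2": 0.42, "C3": 0.60, "C4": 0.10}
print(match_on_propensity(treated, controls))
```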

For a more detailed exploration of medical statistics, you can refer to our guide to medical statistics.

Conclusion

Statistical analysis is crucial in clinical trials, enabling researchers to draw valid and reliable conclusions about new treatments. By understanding and applying key statistical methodologies, researchers can ensure their findings are credible and impactful. This, in turn, contributes significantly to the advancement of medical knowledge and the improvement of patient care.

FAQs

Statistical analysis in clinical trials involves the application of statistical methods to evaluate and interpret data collected during the trial. It helps determine whether observed effects are due to the treatment being tested or random variation. More information can be found on ClinicalTrials.gov.

The five basic methods of statistical analysis in clinical trials include:

- Descriptive Statistics: Summarizing data using measures such as mean, median, and standard deviation.

- Inferential Statistics: Drawing conclusions from sample data to make inferences about a population.

- Regression Analysis: Examining relationships between variables.

- Survival Analysis: Analyzing time-to-event data.

- Bayesian Methods: Incorporating prior information and updating probability estimates with new data.

The five steps in statistical analysis are:

- Data Collection: Gathering accurate and relevant data.

- Data Processing: Cleaning and organizing data for analysis.

- Exploratory Data Analysis: Using descriptive statistics to understand the data.

- Statistical Testing: Applying appropriate statistical tests to draw inferences.

- Interpretation: Interpreting the results and drawing conclusions.

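In clinical trials, a result is considered statistically significant if the p-value is less than a pre-specified threshold (usually 0.05). The p-value is the probability of observing an effect at least as extreme as the one seen if the null hypothesis (no treatment effect) were true, so a small p-value indicates that the observed data would be unusual under the null hypothesis and counts as evidence against it. It is not the probability that the result is due to chance, and it does not measure the size or clinical importance of the effect.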

Conducting a power analysis ensures that the trial has an adequate sample size to detect a clinically meaningful effect, keeping the risk of a Type II error (missing a real effect) at an acceptable level, typically 10% to 20%, and providing reliable results.

Missing data can bias results if not handled properly. Approaches such as imputation methods and sensitivity analyses help address missing data and assess its impact on study outcomes.